There are typically two steps when trying to get a robot from point A to point B:

- Trajectory generation

- Trajectory tracking (or Control)

Before we dig into specific methods for trajectory generation, remember that the last post ended with us being able to take a set of points \(x\), \(y\), \(z\), \(\psi\) at some time \(t\), and obtaining the rest of our state variables based only on these points. This means that we only need to take into account the values of \(x\), \(y\), \(z\), \(\psi\) during our planning since they will allow us to solve for the rest of the state variables at any time in the trajectory. This reduces the number of state variables we have to plan in from 12 to 4 thus greatly simplifying the problem and proving why differential flatness is such a useful property.

Interpolation

The simplest way to generate a trajectory is by linear interpolation. Let’s say our drone is hovering at point \(x_0\), \(y_0\), \(z_0\), \(\psi_0\) at time \(t = 0\) and we want it to fly to \(x_1\), \(y_1\), \(z_1\), \(\psi_1\) at time \(t = 1\).

The straightforward linear interpolation algorithm is as follows: $$ x(t) = x_0 \cdot t + x_1 \cdot (1 - t) $$ $$ y(t) = y_0 \cdot t + y_1 \cdot (1 - t) $$ $$ z(t) = z_0 \cdot t + z_1 \cdot (1 - t) $$ $$ \psi(t) = \psi_0 \cdot t + \psi_1 \cdot (1 - t) $$ There are obvious issues with interpolation, such as how to plan around objects. However, in the case where you have a drone in an open field that you want to transit between certain waypoints, the simplicity of interpolation trivializes the trajectory generation step since the above equations (and their derivatives) are straightforward to implement.

Spline Trajectories

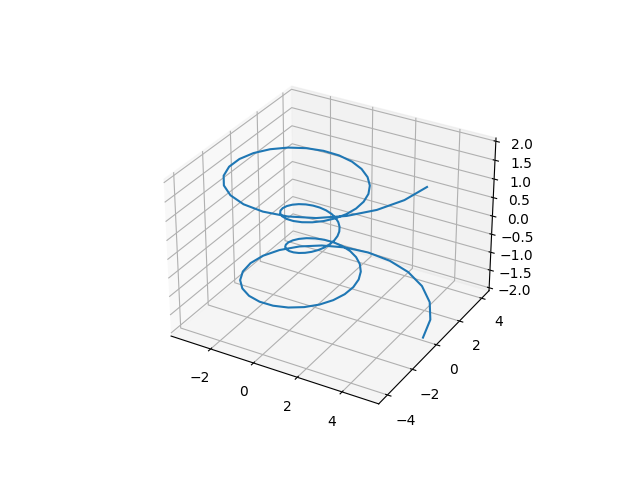

Linear interpolation works fine, but what if we want the trajectory to be smooth so that we don’t have jagged edges when we reach a waypoint and want to transition to another one? A cubic spline will help us achieve a smoother trajectory at the cost of a slightly more complex implementation.

Again, let’s say our drone is hovering at point \(x_0\), \(y_0\), \(z_0\), \(\psi_0\) at time \(t = 0\) and we want it to fly to \(x_1\), \(y_1\), \(z_1\), \(\psi_1\) at time \(t = 1\). In this case, we have: $$ x(t) = (2t^3 - 3t^2 + 1) \cdot x_0 + (t^3 - 2t^2 + t) \cdot m_0 + (-2t^3 + 3t^2) \cdot x_1 + (t^3 - t^2) \cdot m_1 $$ $$ y(t) = (2t^3 - 3t^2 + 1) \cdot y_0 + (t^3 - 2t^2 + t) \cdot m_0 + (-2t^3 + 3t^2) \cdot y_1 + (t^3 - t^2) \cdot m_1 $$ $$ z(t) = (2t^3 - 3t^2 + 1) \cdot z_0 + (t^3 - 2t^2 + t) \cdot m_0 + (-2t^3 + 3t^2) \cdot z_1 + (t^3 - t^2) \cdot m_1 $$ $$ \psi(t) = (2t^3 - 3t^2 + 1) \cdot \psi_0 + (t^3 - 2t^2 + t) \cdot m_0 + (-2t^3 + 3t^2) \cdot \psi_1 + (t^3 - t^2) \cdot m_1 $$ Where \(m_0\) is the slope at the starting point of the segment, (\(x_0\), \(y_0\), etc. depending on the equation) and \(m_1\) is the slope at the ending point of the segment.

In a data set with multiple points, you can do a three-point difference as described in [3] to find these values. You can find a deeper dive on cubic splines in [3].

RRT

Rapidly-exploring Random Trees (RRTs) use a random sampling method to create a graph that can be used for planning. Wikipedia has a great visualization of how an RRT is generated that I've included in Fig 2 above.

I want to be clear here: an RRT will not create the trajectory itself. Instead, it will give you a set of points in your state space that you can use to run something like A*, breadth first search, or some other algorithm to create the trajectory which can then be interpolated with any of the methods above.

You can think of the RRT algorithm as having 4 steps:

- Pick a random valid point \(P_k\) in the configuration space of the system

- Find the closest point to \(P_k\) from our set of previously chosen points. If \(k = 0\) then initialize your set of points to only have \(P_k\) and go to step 1, else continue to 3.

- Connect the closest point in your set to \(P_k\) and check that there aren’t any collisions. If there is a collision, then discard the point and go to step 1. If there is not a collision, then add \(P_k\) to your set of points.

- Repeat from step 1 until you have as many points in your set as you need.

The two subtle points with the standard RRT algorithm are how you find collisions and how you go about generating valid configurations. These will depend on how you are representing obstacles and how well you understand your system dynamics, but neither one is a huge endeavor. Just be mindful of them if you ever need to implement them.

There are many variations to the standard RRT algorithm described here that extend it to do things like bias the sample points towards a goal, handle dynamic environments, or try to generate an optimal solution based on some cost function. I won’t discuss those here but the relevant section on Wikipedia gives a succinct description of many of these variations.

Trajectory Optimization

Trajectory optimization solves the trajectory generation problem by turning it into an optimization problem as follows: $$ \begin{align*} \text{Minimize:} \quad & J = \int_{t_0}^{t_f} L(x(t), u(t), t) \, dt + \Phi(x(t_f)) \\ \text{Subject to:} \quad & \dot{x}(t) = f(x(t), u(t), t), \\ & x(t_0) = x_0, \\ & x(t_f) = x_f, \\ & g(x(t), u(t), t) \leq 0, \\ & h(x(t), u(t), t) = 0, \\ & u_{\text{min}} \leq u(t) \leq u_{\text{max}}, \\ & x_{\text{min}} \leq x(t) \leq x_{\text{max}}, \end{align*} $$ where:

- \(J\) is the objective function to be minimized, which typically includes an integral term representing the cost over time and a terminal cost \(\Phi(x(t_f))\).

- \(x(t)\) is the state vector at time \(t\).

- \(u(t)\) is the control vector at time \(t\).

- \(f\) represents the system dynamics.

- \(g\) and \(h\) represent inequality and equality path constraints, respectively.

- \(u_{\text{min}}\) and \( u_{\text{max}}\) are the bounds on the control inputs.

- \(x_{\text{min}}\) and \(x_{\text{max}}\) are the bounds on the state variables.

- \(t_0\) and \(t_f\) are the initial and final times, respectively.

In this section we will focus on a technique called direct collocation which discretizes the continuous problem before feeding it into a solver. As always, my goal is to give a high level overview so that the intuition comes through. For those who want a more in depth explanation of direct collocation, [2] is an excellent reference. I recommend that everyone reads [2] whether you implement direct collocation or not as it is an incredible introduction to using optimization techniques with dynamical systems.

Direct Collocation

As mentioned earlier, direct collocation works by turning the continuous trajectory optimization problem into a discrete one. The specific technique I’ll discuss is trapezoidal collocation since it makes the concept clear. For other techniques like Hermite-Simpson collocation, see [2].

Trapezoidal collocation turns the continuous problem into a discrete one by approximating the change in state defined by the system dynamics with a trapezoid quadrature: $$ \dot{x} = f $$ $$ \int_{t_k}^{t_{k+1}} \dot{x} \, dt = x_{k+1} - x_k \approx \frac{1}{2} \Delta t (f_{k+1} + f_k) $$ which are enforced on every segment of the trajectory. The objective function is similarly approximated with a trapezoidal quadrature as follows: $$ J = \int_{t_0}^{t_f} L(x(t), u(t), t) \, dt + \Phi(x(t_f)) \approx \sum_{k=t_0}^{t_f} \frac{1}{2} \Delta t (L_k + L_{k+1}) $$ Note that the terminal cost can be ignored when minimizing since it is constant.

Constraints are handled by enforcing them at each collocation point k in the trajectory. Note that constraints tend to be the trickiest aspect since there aren’t great ways of handling them and all it takes is one constraint to be violated for a problem to be infeasible.

Now that the problem is discrete, we can feed it into a solver which, assuming there is a solution and we have a good initial guess, will give us the control inputs and states across the entire trajectory. The only thing left is for us to interpolate each segment along the trajectory. Trapezoidal collocation does this by using a linear spline for the control inputs and a quadratic spline for the states.

So for the control in each segment \(k\) we have, $$ u(t) \approx u_k + \frac{t - t_k}{t_{k+1} - t_k} (u_{k+1} + u_k) $$ And for the state in each segment \(k\) we have, $$ x(t) \approx x_k + f_k (t - t_k) + \frac{(t - t_k)^2}{2(t_{k+1} - t_k)} (f_{k+1} - f_k) $$ I strongly recommend looking into [2] if you want to dive into trajectory optimization.

Now What?

One last thing I want to mention is that the techniques earlier in this chapter output a trajectory in the robots state space which then has to be tracked by a controller. However, trajectory optimization outputs a trajectory as well as a set of control inputs that can execute that trajectory. This begs the question: why don’t we just tell the drone to execute the control inputs and ignore the generated trajectory? This is in fact a popular approach often referred to as Model Predictive Control but there are some subtleties and variations which I will discuss in the next post relating to control.

Regardless of which of the above techniques we use, we now have a way to generate trajectories for our robots. But how do we turn these trajectories into control inputs that our robot understands? That’s the topic of the next post. See you there.

References

[1] Russ Tedrake. Underactuated Robotics: Algorithms for Walking, Running, Swimming, Flying, and Manipulation (Course Notes for MIT 6.832). Downloaded on 9/17/2022.

[2] Kelly, M. (2017). An Introduction to Trajectory Optimization: How to Do Your Own Direct Collocation. SIAM Review, 59(4), 849-904.

[4] By Javed Hossain - Own work, CC BY-SA 3.0, source